Assignment 5 Report

Intro/Overview

Pitch:

How do we protect our borders while also ensuring training is efficient, economical, and actually has an impact? Generic Border Force Trainer does all this and more! Provide up-to-date, efficient, concise training to your employees, or run through different scenarios to provide total coverage of training.

Design Revision

Since Assignment Task 4, our application has changed in a few ways. While the original idea and interactions have mainly stayed the same, the atmosphere and environment of the application have changed drastically. Firstly, instead of a static environment with no proper goals, you now receive the items from NPCs and examine them in front of the NPCs, who leave when the examination is complete. Secondly, the application now has simple goals/tasks, instead of being totally undirected. There is now a scoring system, which awards a point when you get the examination correct and takes a point away when you get it wrong. Furthermore, there are now 9 different boxes that the user may examine. This is bolstered by the fix to the x-ray interaction, which now works correctly. On top of these, a few models have been changed, and the overall feel of the application is now more professional and less thrown together. The actual changes and their impact will be discussed below.

Technical Development

As mentioned above, there have been quite a few changes to the scene since the last assignment. While the overall goals of the program have not changed, the visual and atmospheric aspects have changed greatly, and steps have been made towards more immersion and more interactions. First and foremost, one of the largest technical developments is the introduction of an environment that is similar to its real-world equivalent. This environment has been filled with models that give a realistic example of what the actual workers/trainees would be interacting with, which we expect to increase immersion and retention.

The key technical developments of our program are:

The x-ray machine

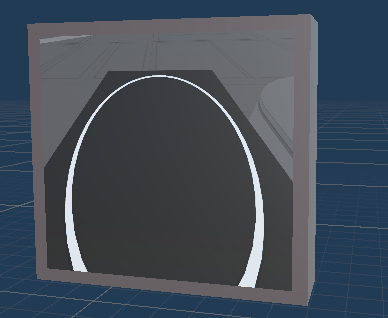

The x-ray machine is created using a camera and a render texture. The render texture displays the output of the camera, which is placed behind the x-ray machine. To get the desired effect, the camera uses layer culling to skip the layer that the outside of the box is on, so the contents stay visible.
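The layer-culling idea can be sketched outside the engine. The following is a minimal Python illustration, not the project's Unity script: it treats the culling mask as a 32-bit bitmask with one bit per layer, and the layer index `BOX_EXTERIOR_LAYER` is a hypothetical value, since the report does not say which layer the box exterior uses.

```python
# Engine-independent sketch of a layer culling mask (one bit per layer).
# BOX_EXTERIOR_LAYER is an assumed index; the report does not name the layer.
BOX_EXTERIOR_LAYER = 8

ALL_LAYERS = 0xFFFFFFFF  # every one of the 32 layers is rendered


def hide_layer(mask: int, layer: int) -> int:
    """Return a copy of the mask with the given layer's bit cleared."""
    return mask & ~(1 << layer) & 0xFFFFFFFF


def is_visible(mask: int, layer: int) -> bool:
    """True if a camera using this mask would render the given layer."""
    return ((mask >> layer) & 1) == 1


# The x-ray camera renders everything except the outside of the box.
xray_mask = hide_layer(ALL_LAYERS, BOX_EXTERIOR_LAYER)
```

With this mask, objects on the box-exterior layer are skipped by the x-ray camera while the items inside, which sit on other layers, are still drawn.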

The Scoring and Acceptance/Rejection Bins

The bin has a script attached that checks whether the item's tag matches the decision that the bin represents. If the judgement is correct, the script increments the score counter; otherwise, it decrements it.
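As a rough illustration of the bin check, here is a minimal Python sketch rather than the actual Unity script. The tag names `"Legal"` and `"Illegal"`, and the `Bin`, `ScoreCounter` and `deposit` names, are assumptions made for the example.

```python
from dataclasses import dataclass

# Hypothetical tag names; the report does not list the real tags.
LEGAL_TAG = "Legal"
ILLEGAL_TAG = "Illegal"


@dataclass
class Bin:
    """A bin that counts a deposit as correct only for one item tag."""
    accepted_tag: str


class ScoreCounter:
    """Holds the running score: +1 for a correct call, -1 for a wrong one."""

    def __init__(self) -> None:
        self.score = 0

    def record(self, correct: bool) -> None:
        self.score += 1 if correct else -1


def deposit(target: Bin, item_tag: str, counter: ScoreCounter) -> bool:
    """Check the item's tag against the bin and update the score."""
    correct = item_tag == target.accepted_tag
    counter.record(correct)
    return correct


accept_bin = Bin(accepted_tag=LEGAL_TAG)
reject_bin = Bin(accepted_tag=ILLEGAL_TAG)
counter = ScoreCounter()

deposit(accept_bin, LEGAL_TAG, counter)    # correct: score goes to 1
deposit(reject_bin, ILLEGAL_TAG, counter)  # correct: score goes to 2
deposit(accept_bin, ILLEGAL_TAG, counter)  # wrong: score drops to 1
```

Keeping the score in a separate counter object mirrors the report's split between the bin script and the score script.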

NPCs

The NPC script works by spawning an NPC at the door. The NPC then moves to the preset locations. The script contains a list of objects, which is cycled through when it comes time to spawn the objects to be examined. The script spawns 3 items to 3 spawning positions on the table. If the items are all destroyed (placed in the bins), the NPC spawns a new NPC at the door, while also destroying itself.
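The spawning cycle described above can be sketched as follows. This is a simplified Python model under stated assumptions, not the project's script: movement and animation are left out, the `NpcSpawner` class and its method names are hypothetical, and the end of the game is not modelled.

```python
from itertools import cycle

ITEMS_PER_NPC = 3  # from the report: 3 items on 3 table positions


class NpcSpawner:
    """Tracks the current NPC's items and spawns the next NPC when all are binned."""

    def __init__(self, item_pool: list[str]) -> None:
        self._items = cycle(item_pool)  # the item list is cycled through
        self.npcs_spawned = 0
        self.on_table: list[str] = []
        self.spawn_npc()

    def spawn_npc(self) -> None:
        """A new NPC arrives and puts the next three items on the table."""
        self.npcs_spawned += 1
        self.on_table = [next(self._items) for _ in range(ITEMS_PER_NPC)]

    def item_binned(self, item: str) -> None:
        """Called when an item is destroyed by being placed in a bin."""
        self.on_table.remove(item)
        if not self.on_table:
            # The old NPC leaves and a new one spawns at the door.
            self.spawn_npc()


spawner = NpcSpawner(["cap", "pistol", "carrot", "glasses"])
for item in list(spawner.on_table):
    spawner.item_binned(item)
```

After the three items of the first NPC are binned, the spawner has created a second NPC with the next three items from the cycled list.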

The Things Script

This script is placed on the packages that are placed in a bin. Its main use is to increment the count of objects that have been placed in bins, so the spawner can track them. It also holds a boolean based on whether the item is illegal or not.

Score Script

This script handles storing the score. Once the game is completed, it waits 7 seconds and displays the final score as text.

Initial 3D Models

1. Kenney Character Assets: These are used as NPCs. These NPCs are the ones who deliver the packages that you must check. They play a simple walk animation over to the table, then drop the packages off. Three are used in this software.

2. 3D Scifi Starter Kit: We used this starter kit as a base for the table as well as the 3D environment. Whilst it doesn't directly fit 1:1 as border patrol, it works well as temporary scenery.

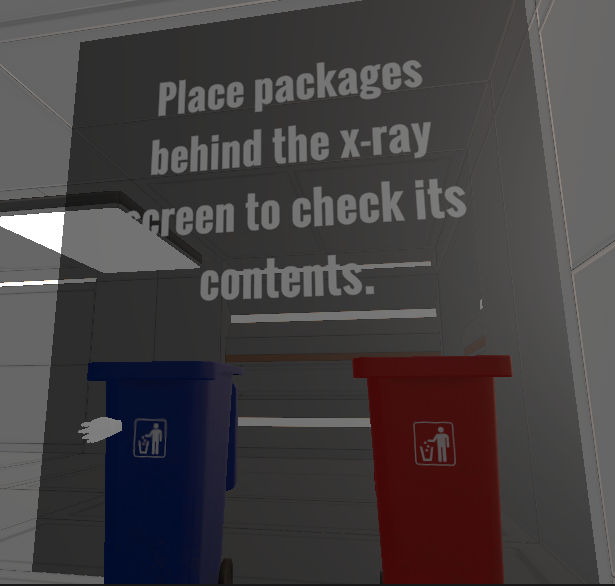

2. Plastic trash bins: These trash bins were used to deposit the packages in game. The red and blue bins were used. These models are static, and are simply receptacles.

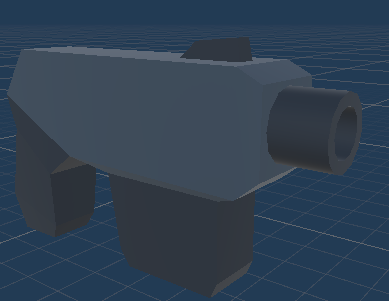

3. Sci-Fi gun: Used as an example of an illegal item.

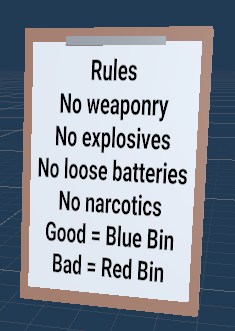

4. Clipboard (Created by us): This is used to give the player some explanation on how to play the game. It is able to be picked up but the main use is just to be read.

5. Package: These packages are the models we used for the packages you must scan and deposit. We chose this simple design as it's obvious to the user what it is. The model inside Unity also has a transparent box inside that so that you can see inside of it.

6. Glasses (Kenney Character Assets): Used as an example of a legal item.

7. Cap (Kenney Character Assets): Used as an example of a legal item.

8. Pistol (Kenney Character Assets): Used as an example of an illegal item.

9. Backpack (Kenney Character Assets): Used as an example of a legal item.

11. Carrot (Low Poly Dungeon): Used as an example of a legal item.

12. Monitor: This monitor is simply built with a camera, render texture and cube, The camera is behind monitor, displaying what it sees via the render texture. You can see the render shows a downward facing view. This view actually allows you to see through any boxes placed in front of the camera.

Overview of Scene with Images

The first part of the scene serves two purposes. The black wall with text shows the scoreboard/game progress, and the bins are where items are deposited.

The other part of the scene also has two areas. On the left is the x-ray machine, where you can place items in front of the camera to see through the packaging and judge the contents. On the right is the table on which the boxes are placed. This is the area the NPCs move to. It also holds the clipboard so the user can learn the basic rules.

Usability Testing

Design and Plan

When designing and outlining the testing for the application, we decided to prioritise what had been reinforced the entire semester: human-like behaviour. We believe that, if it can be measured, it is the best metric for knowing whether an application is effective in its use. Most of the other design choices are cosmetic in some way, so they can be adapted to fit the needs of the application and easily modified in response to user feedback. The interactions, on the other hand, are the principal mechanics of the application, and also one of the main reasons for using VR in the first place. If these are not effective, human-like, and intuitive, then the whole application falls apart. Therefore, we decided to test a few things that relate to these human-like interactions, and to the environment as a whole. Most of our questions are on a scale of 1-5, which gives a comparable baseline between testers, although the low number of testers means the results should not be read as meaningful statistics. The testing also asks the testers a similar question before and after the session, to gauge their expectations of the application's realism against the reality.

Recruitment

During the recruitment process of our testing, it was difficult to find willing participants, let alone participants that fit our target demographic. We managed to get 7 friends and family to participate, and of these 7, 3 were willing to “role-play” being a border inspection officer. These testers ranged from ages 19-54, and came from a wide variety of backgrounds and demographics.

Methods

Our testing is split into three parts: pre-testing questions, testing evaluation, and post-testing questions. For the realism question, the objectives of the application in relation to the real-world counterpart were explained.

Pre-testing Questions:

On a scale of 1-5, how realistic do you think the objectives will be?

Is there anything you don’t understand/what are you expecting?

Have you ever used VR before?

Testing Evaluation:

Did the tester figure out the interactions easily enough? (basic controls were explained)

Were they accurate in judgements?

What mistakes were made?

Post-testing Questions:

On a scale of 1-5, how realistic were the objectives for you?

On a scale of 1-5, how intuitive did you find the interactions?

On a scale of 1-5, how easy-to-use did you find the application?

Results - * indicates a role-player

| Tester | Pre-test realism | Post-test realism | Used VR before? | Ease of use | Intuitiveness |
|---|---|---|---|---|---|
| 1* | 2/5 | 3/5 | No | 3/5 | 3/5 |
| 2 | 1/5 | 3/5 | No | 2/5 | 2/5 |
| 3* | 3/5 | 4/5 | No | 4/5 | 3/5 |
| 4 | 2/5 | 2/5 | Yes | 2/5 | 2/5 |
| 5* | 3/5 | 4/5 | No | 4/5 | 3/5 |
| 6 | 1/5 | 3/5 | No | 3/5 | 4/5 |
| 7 | 3/5 | 4/5 | No | 3/5 | 3/5 |

Findings

The above table shows the data most relevant to the metrics we wanted to measure. It can be seen quite easily that, despite 3 of the testers role-playing a border security officer, expectations before the testing were low. This could be related to the fact that all but 1 of our testers had never used VR before, although even the tester who had used VR didn't have high expectations. Furthermore, the evaluations done during the testing show that most testers were able to navigate and understand the virtual world relatively easily. 3 testers struggled to pick up the box, but once the controls had been explained again, they easily figured out the goals in the virtual space. Placing the package in the correct bin seemed to be quite easily grasped, and all testers were able to get the packages in the bins in due time (some allowances were made for the fact that almost everyone was using VR for the first time). The table only partly backs this up: the intuitiveness ratings average about 2.9 out of 5, just under the middle of the scale, with most testers giving a 3. The ratings could also be skewed by the coarse 1-5 scale, on which testers can default to the middle score of 3.
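For reference, the column averages can be computed directly from the results table. The short Python sketch below uses only the scores listed in the table; the dictionary keys are labels chosen for this example.

```python
from statistics import mean

# Scores copied from the results table, in tester order 1 to 7.
results = {
    "pre_test_realism": [2, 1, 3, 2, 3, 1, 3],
    "post_test_realism": [3, 3, 4, 2, 4, 3, 4],
    "ease_of_use": [3, 2, 4, 2, 4, 3, 3],
    "intuitiveness": [3, 2, 3, 2, 3, 4, 3],
}

# Mean of each column, rounded to two decimal places.
averages = {name: round(mean(scores), 2) for name, scores in results.items()}

for name, value in averages.items():
    print(f"{name}: {value} / 5")
```

This gives averages of 2.14 for pre-test realism, 3.29 for post-test realism, 3 for ease of use, and 2.86 for intuitiveness. With only 7 testers, these figures are indicative at best.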

Addressing Results

The results lean in our favour, but, as mentioned above, they can be skewed by a number of factors. The main issue to address is the intuitiveness of the interactions. The current ratings sit around the middle of the scale, and we need the intuitiveness to average at least 4. This is the main force behind our application, and it needs to be as close to perfect as possible for the training to be effective.

References:

Furness, T.A. (2001) ‘Toward tightly coupled human interfaces’, Frontiers of Human-Centered Computing, Online Communities and Virtual Environments, pp. 80–98. doi:10.1007/978-1-4471-0259-5_7.

Baron, P. and Corbin, L. (2012) ‘Student engagement: Rhetoric and reality’, Higher Education Research and Development, 31, pp. 759–772.

Kenney character assets by Kenney, Kay Lousberg (no date) itch.io. Available at: https://kenney.itch.io/kenney-character-assets (Accessed: 08 October 2023).

3D scifi kit starter kit: 3D environments (no date) Unity Asset Store. Available at: https://assetstore.unity.com/packages/3d/environments/3d-scifi-kit-starter-kit-92152 (Accessed: 08 October 2023).

Yughues Free Bombs (2015), Unity Asset Store. Available at: https://assetstore.unity.com/packages/3d/props/weapons/yughues-free-bombs-13147

Ultimate Low Poly Dungeon (2022), Unity Asset Store. Available at: https://assetstore.unity.com/packages/3d/environments/dungeons/ultimate-low-poly...

Cardboard Boxes Pack (2015), Unity Asset Store. Available at: https://assetstore.unity.com/packages/3d/props/cardboard-boxes-pack-30695

Plastic trash bins: 3D exterior (no date) Unity Asset Store. Available at: https://assetstore.unity.com/packages/3d/props/exterior/plastic-trash-bins-16077... (Accessed: 27 October 2023).

Sci-Fi Gun: 3D (no date) Unity Asset Store. Available at: https://assetstore.unity.com/packages/3d/sci-fi-gun-30826 (Accessed: 27 October 2023).

Lunar Landscape 3D (2019) Unity Asset Store. Available at: https://assetstore.unity.com/packages/3d/environments/landscapes/lunar-landscape... (Accessed: 27 October 2023).


KIT208 AT4/5 - Border Force Trainer

| Status | Prototype |

| Author | joshuaa2 |

| Genre | Simulation |
